Google recently launched a service called Stadia that lets you play video games through the cloud, and experiences with the platform have been mixed. Some reviewers of Stadia have had the service work almost flawlessly for them. Others have been less lucky, experiencing comical amounts of latency and other technical glitches.

There has already been a lot of good discussion about Stadia's business prospects, and about those of cloud gaming in general, but I wanted to focus on a particular aspect of the technical challenge facing Stadia - one I think will be especially important to whether cloud gaming lives or dies: network lag.

My pet theory is that people will tolerate lowish resolutions, weird compression artifacts, mediocre framerates, and all sorts of other issues, as long as their experience is snappy and responsive. But if the game visibly lags behind their inputs, people will run away screaming.

So it was quite promising to me when Stadia's VP of Engineering proclaimed that the service would have negative latency. The buzzword itself implied some kind of physically impossible time travel, but the real explanation was more modest:

"'Negative latency' is a concept by which Stadia can set up a game with a buffer of predicted latency between the server and player, and then use various methods to undercut it. It can run the game at a super-fast framerate so it can act on player inputs earlier, or it can predict a player's button presses."

I was intrigued. Could Stadia really predict inputs precisely enough, or run the game fast enough, to effectively "negate" latency? If so, why were players experiencing severe latency issues anyway?

To find out, I tried implementing some of these techniques myself. My results, at least so far, pointed less to any perfect "solution" to the problem and more to a bunch of tricks that only sort of work.

Disclaimer: this article was set up on a 60 fps display and may not work properly at different framerates. I hope to fix this in a future update.

I thought the best way to test these techniques would be through experimenting on an actual game - something simple, but with precise enough gameplay that any latency issues would be nice and frustrating. Meet our highly unoriginal test game, "Flapping Bird":

We'll work with this game throughout the article - first by "breaking" it through the introduction of some artificial network latency, then by trying to "un-break" it using some of the techniques Google and others have contemplated.

Like most other video games you have played before, the above game is being run and rendered entirely on your own device. This means that the latency is nice and low, but it also means that I can only show you as sophisticated a game as your device can actually run.

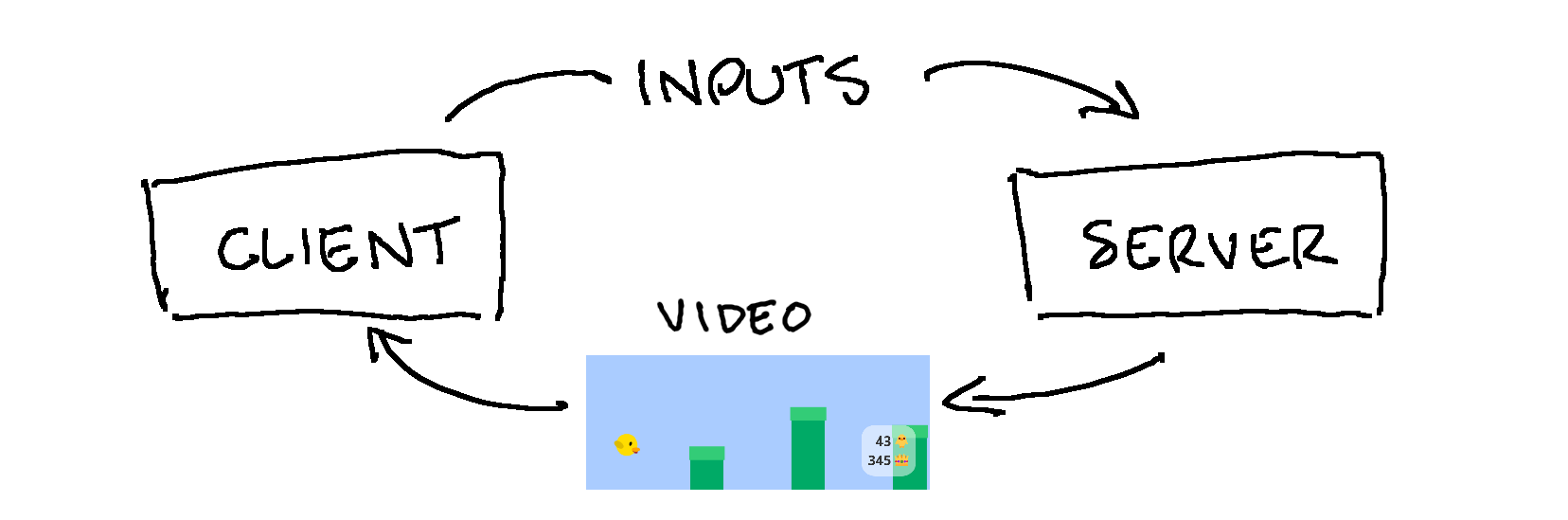

Cloud streaming flips this around by running the game on a computer somewhere else. Your device records your inputs and sends them to the game server, and the game server sends you back your video:

The benefit of this is that the game server can be as powerful and well-maintained as Google's money can buy. Your device only has to be powerful enough to stream video, which most modern phones and TVs can do just fine. However, your device and the game server now have to communicate with each other, and that communication adds a delay.

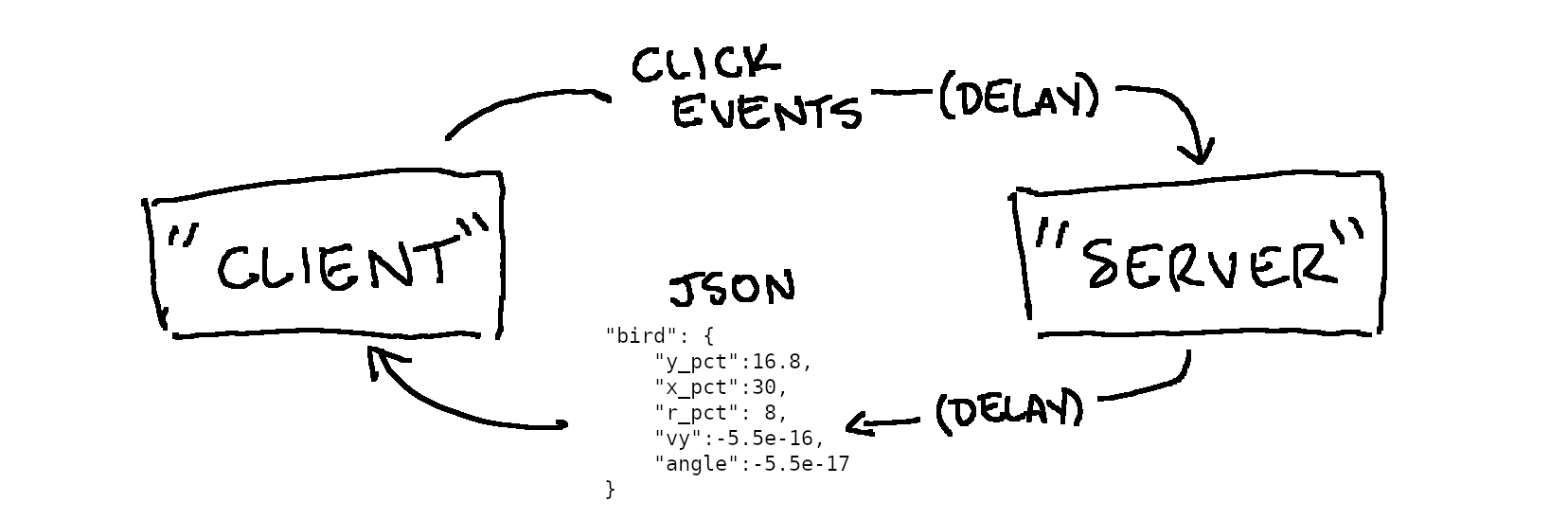

This article won't attempt to actually deal with how the video itself is encoded and sent, which is a whole separate challenge. Instead, our "video" data will just be some text: namely, a JSON string that describes each of the objects to be rendered. Also, instead of actually sending that JSON over a real network, we will just schedule in a delay before it "arrives."

On the other side, we will program a similar delay into "sending" our inputs. So there will be two separate delays: one for our inputs to travel to the server, and one for the video data to travel back. Putting it all together, our setup will look like this:

And here is the result:

The slider sets the total round-trip latency for the game. This total latency is (arbitrarily) split half-and-half between the inputs traveling to the "server" and the "video" data traveling back to you. Try it out, and see how added latency affects the game experience.
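For readers who want to see the shape of this setup in code, here is a minimal Python sketch of the simulated round trip. It is not the code behind the demo: `DelayedChannel`, `split_round_trip`, and `first_visible_frame` are hypothetical names, the JSON layout is made up, and I am assuming delays are measured in whole frames at 60 fps.

```python
import json
from collections import deque


class DelayedChannel:
    """Delivers each message a fixed number of frames after it was sent."""

    def __init__(self, delay_frames):
        self.delay_frames = delay_frames
        self.in_flight = deque()  # (arrival_frame, payload), in send order

    def send(self, frame, payload):
        self.in_flight.append((frame + self.delay_frames, payload))

    def receive(self, frame):
        """Return every payload whose arrival frame has been reached."""
        arrived = []
        while self.in_flight and self.in_flight[0][0] <= frame:
            arrived.append(self.in_flight.popleft()[1])
        return arrived


def split_round_trip(round_trip_ms, fps=60):
    """Split the total latency (in frames) half-and-half: inputs up, video down."""
    total_frames = round(round_trip_ms * fps / 1000)
    uplink = total_frames // 2
    return uplink, total_frames - uplink


def first_visible_frame(round_trip_ms, click_frame, total_frames=60):
    """Frame at which the player first sees the effect of a click, or None."""
    uplink, downlink = split_round_trip(round_trip_ms)
    inputs = DelayedChannel(uplink)
    video = DelayedChannel(downlink)
    for frame in range(total_frames):
        # Client: "send" this frame's input to the server.
        inputs.send(frame, frame == click_frame)
        # Server: act on whatever inputs have arrived, then "send" the video,
        # which here is just a JSON string describing what to draw.
        jumped = any(inputs.receive(frame))
        video.send(frame, json.dumps({"frame": frame, "bird": {"jumped": jumped}}))
        # Client: draw whatever video has arrived.
        for message in video.receive(frame):
            if json.loads(message)["bird"]["jumped"]:
                return frame
    return None
```

With a 200 ms round trip, a click on frame 5 first shows up on frame 17, twelve frames later; with zero latency it shows up on the same frame.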

Now that we have latency in our system, we can start trying our various tricks for undercutting it.

The first trick mentioned was to "run the game at a super-fast framerate so it can act on player inputs earlier." Stadia would not be the first system to use this sort of technique: in particular, a programmer named Dwedit implemented a method called Run-Ahead for the RetroArch retro game emulator, and people have speculated that Stadia may be attempting something similar. Run-Ahead basically works like this:

So, if the game is expected to have 3 frames of lag, then it has to run the game forward 3 "speculative" frames for every 1 frame actually processed or displayed. In other words, the computer running the game has to run it up to 3 times faster than it would normally have to. (That said, actually rendering the video - usually the most compute-intensive step - only has to be done once.)
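As a rough illustration of the idea (not RetroArch's actual implementation), here is a Python sketch of one Run-Ahead step for a toy bird. The physics constants, `BirdState`, `step`, and `run_ahead` are all hypothetical, and I am assuming the speculative frames are run with no further button presses.

```python
from dataclasses import dataclass

GRAVITY = 1.0          # toy value: added to vertical velocity each frame
FLAP_VELOCITY = -8.0   # toy value: velocity right after a flap (negative = up)


@dataclass(frozen=True)
class BirdState:
    y: float = 0.0
    vy: float = 0.0


def step(state, pressed):
    """Advance the toy game by one frame."""
    vy = FLAP_VELOCITY if pressed else state.vy + GRAVITY
    return BirdState(y=state.y + vy, vy=vy)


def run_ahead(state, pressed, lag_frames):
    """Return (real_state, displayed_state) for one displayed frame.

    The real state advances by exactly one frame. The displayed state is a
    throwaway copy run `lag_frames` further ahead, so the player sees where
    the game will be once the expected lag has elapsed.
    """
    real = step(state, pressed)
    speculative = real  # the state is immutable, so this acts as a save state
    for _ in range(lag_frames):
        speculative = step(speculative, False)  # assumption: no new presses
    return real, speculative
```

Only `real` is carried into the next frame; `speculative` is what gets rendered and is then discarded. That is where the extra simulation cost comes from: each displayed frame requires the real step plus `lag_frames` speculative ones.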

Let's see what that extra processing gets us. Here is a rough implementation of the Run-Ahead technique for our game:

My subjective experience is that this technique can sort of make low latencies (say, up to 200 ms) feel more responsive, but that it causes unbearable jittering at higher latencies. In particular, tapping the bird causes it to "snap" upward instead of hopping smoothly.

Why do we get this upward "snap"? It turns out that there is one big difference between using Run-Ahead to manage the kind of latency that it was developed for (i.e., emulating retro games), and using it to manage our cloud gaming setup here. In RetroArch, most of the lag happens after the emulator has already received the inputs. The emulator is then just waiting for the game to proceed - which is why it makes sense to speed the game up. In the case of cloud gaming, the inputs themselves have to travel to the server, so there is also some latency before the system even receives them. That means a few (bad) things for Run-Ahead here:

In other words, Run-Ahead alone won't solve our problem, since it was designed for a different kind of lag. What else can we do?

So we know that we can only get our game video from the server, and we know that we don't want to wait for our inputs to reach the server first. How could we see the effects of our inputs without waiting for the server? We could just have the server preemptively send us video data for a bunch of different cases, and have the client select the appropriate one based on the user's input.

For our little game, if there is only one frame of latency, you only need two cases: a case for if the user clicks, and a case for if they don't. If there are two frames of latency, you have to send four cases: two cases for the current frame, times two cases for the previous one. Extending this, we have to send 2^N cases, where N is the number of frames of lag. If you are playing a 60 fps game with 200 ms latency, that means you will have about 12 frames of latency, which will require that the server pre-emptively send you 2^12 = 4,096 possible cases to select from. So you would need to do the equivalent of streaming 4,000 HD videos at once!
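The arithmetic is easy to check. In this small Python sketch, `frames_of_lag` and `all_input_histories` are hypothetical helper names, and the frame count is simply rounded to the nearest whole frame.

```python
from itertools import product


def frames_of_lag(latency_ms, fps=60):
    """Number of frames that elapse during the given latency."""
    return round(latency_ms * fps / 1000)


def all_input_histories(n_frames):
    """Every click / no-click sequence the player could produce in n_frames."""
    return list(product((False, True), repeat=n_frames))


n = frames_of_lag(200)              # 200 ms at 60 fps
cases = len(all_input_histories(n))
print(n, cases)
```

Each extra frame of lag doubles the number of histories, which is why the count gets out of hand so quickly.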

But we can reduce that complexity, at the expense of some accuracy. Instead of assuming the user can press the button every single frame, let's assume they could only have pressed it once during our lag period. That way, instead of 2^N cases, we will only have to handle about N cases: one for if they didn't click recently, one for if they clicked at this frame, one for if they clicked at the previous frame, and so on.
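Here is a hedged Python sketch of that shortcut, using the same kind of toy physics as a stand-in for the real game. The names `advance`, `speculative_variants`, and `select_variant` are hypothetical, and each "video frame" is reduced to a single number (the bird's height). Counting the no-click case separately gives N + 1 variants in this sketch, which is still linear in N.

```python
GRAVITY = 1.0          # toy value
FLAP_VELOCITY = -8.0   # toy value (negative = up)


def advance(y, vy, presses):
    """Run the toy physics over a sequence of per-frame presses; return height."""
    for pressed in presses:
        vy = FLAP_VELOCITY if pressed else vy + GRAVITY
        y += vy
    return y


def speculative_variants(y, vy, lag_frames):
    """Server side: one predicted frame per 'at most one click' history.

    Keys are how many frames ago the click happened (0 = this frame),
    or None for "no click during the lag window".
    """
    variants = {None: advance(y, vy, [False] * lag_frames)}
    for frames_ago in range(lag_frames):
        presses = [False] * lag_frames
        presses[lag_frames - 1 - frames_ago] = True
        variants[frames_ago] = advance(y, vy, presses)
    return variants


def select_variant(variants, recent_presses):
    """Client side: pick the variant matching the local input history.

    `recent_presses` holds one bool per frame of the lag window, oldest
    first. If the player clicked more than once, only the most recent click
    is honored; that is the accuracy we gave up.
    """
    for frames_ago, pressed in enumerate(reversed(recent_presses)):
        if pressed:
            return variants[frames_ago]
    return variants[None]
```

The client never simulates anything here. It only looks up which of the pre-sent frames matches what the player actually did.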

This is an extremely rough proof of concept, and making it really usable would take a lot more work than I've done here, but it shows - even if just for fleeting moments - that you can begin to compensate for even relatively high latencies with this technique.

The downside, though, is that even with our shortcut we are still processing and transmitting a large amount of data: for 200 ms latency at 60 fps, we are sending 12 versions of each video frame. Lots of home internet connections can stream an HD video just fine; fewer can stream 12 at once.

All of the above is for a very simple game with just one binary input: either we are jumping, or we aren't. How would this work for a more typical game, where the user has a whole controller full of different inputs they can use?

id Software has patented a possible way to do it: rather than sending every possible combination of all the different inputs, each of them gets associated with a "motion vector" describing how the video feed would change in response to that input. If a user has pressed multiple inputs, then the client can just add up the motion vectors.

This could work - it seems especially well-suited to first-person 3D games - but compositing the correct video from the motion vectors adds work that the client (your phone or computer) has to do. The more complex that composition, the harder the demands on the client - which could begin to erode the benefits of cloud gaming in the first place.

Finally, Google could simply try to predict the user's button presses and handle them beforehand. On one hand, if any group in the world has the machine learning chops to predict exactly how people would play a video game, it is probably Google. But on the other hand, this is a uniquely punishing problem.

Imagine you have a self-driving car: you tell the computer a destination, you flip the switch, and the car's computer begins making (and executing on) predictions for how you would drive. The car might occasionally behave differently than you would, but disagreement is no problem as long as the car behaves "normally" and ends up in the right place.

Now, imagine that you and the computer are both trying to drive the same car at the same time. Most of the time, you might agree, but occasionally, you and the computer will disagree on little details, like the exact timing and style of your movements. When that happens, the car will "jitter", first acting on the computer's faster input, and then reacting to your input when it comes in later. The car will constantly be juggling between your inputs and the computer's.

For a (horrible) example, here is a version where the server "predicts" your next click based on the interval between your last two clicks:
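The prediction rule itself fits in a few lines. This Python sketch is only an approximation of the idea described above, with hypothetical names (`predict_next_click`, `prediction_errors`) and click times measured in frames.

```python
def predict_next_click(click_frames):
    """Guess the next click by repeating the interval between the last two."""
    if len(click_frames) < 2:
        return None  # not enough history to guess
    previous, last = click_frames[-2], click_frames[-1]
    return last + (last - previous)


def prediction_errors(click_frames):
    """For each click after the second, how far off the guess was (in frames).

    Negative means the server would have clicked early; positive means late.
    """
    errors = []
    for i in range(2, len(click_frames)):
        predicted = predict_next_click(click_frames[:i])
        errors.append(predicted - click_frames[i])
    return errors
```

For a player who clicks on frames 10, 30, 52, and 70, the guesses would be frames 50 and 74: two frames early, then four frames late. Even errors that small mean the server has clicked at a moment the player did not choose.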

This example illustrates the worst possible outcome of input prediction: when the server predicts wrongly, even a little, it feels like you are no longer the one playing the game. The better the prediction algorithm, the less this problem will appear - but as long as the server can make inputs directly on your behalf, it will happen occasionally.

But one other interesting option might be to combine input prediction with the "speculative video" technique we explored above. If you can predict that a user's input is 99.999% likely to be one of 5 cases, then you only have 5 possible frames of video to send over, drastically reducing the amount of data you have to send. In the cases where the inputs can't be predicted, or the prediction is wrong, you would have some latency or jitter, but the server would never actually be making inputs "for" you.
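To make the combination concrete, here is a small Python sketch of the selection step only. It assumes some predictor has already assigned a probability to each candidate input history; the predictor is not shown, and both the `cases_to_send` name and the example numbers are made up for illustration.

```python
def cases_to_send(probabilities, coverage=0.99999):
    """Pick the most likely cases until their total probability hits `coverage`.

    `probabilities` maps a case label to its predicted probability.
    """
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    chosen, total = [], 0.0
    for case, probability in ranked:
        if total >= coverage:
            break
        chosen.append(case)
        total += probability
    return chosen


# Hypothetical predictor output for one frame.
example = {
    "no_click": 0.90,
    "click_now": 0.06,
    "click_1_frame_ago": 0.03,
    "click_2_frames_ago": 0.009,
    "click_3_frames_ago": 0.001,
}
print(cases_to_send(example, coverage=0.95))
```

The server would then render and send only the chosen cases. If the player's actual input falls outside them, the client falls back to ordinary latency rather than showing an input the player never made.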

We've seen a couple of techniques that people have speculated might be used to fight latency in cloud gaming. They are not without their drawbacks: some help manage the latency but add a lot of computing and networking cost, and some potentially create a gameplay experience even worse than the latency itself was.

While I expect the teams behind Stadia, Project xCloud, GeForce NOW, and the other cloud gaming services have worked much harder on techniques like these than I have, my biggest feeling right now is that trying to "negate" existing latency is probably less helpful than just trying to minimize it in the first place. Google's Stadia deep dive from Google I/O '19, for example, has a whole lot of discussion about how the network is designed to minimize lag, with relatively little about any tricks for negating that lag.

So, while I'm sure Google is hard at work on "negative latency", I wonder how much of that has made it to Stadia's launch so far. When people have complained about Stadia, they've complained about "ordinary" latency, rather than the kind of jitter that you can see in the examples above. I wouldn't be surprised if, at least for now, they are doing without the time travel.